Learning Multi-Layer Latent Variable Model via Variational Optimization of Short Run MCMC for Approximate Inference

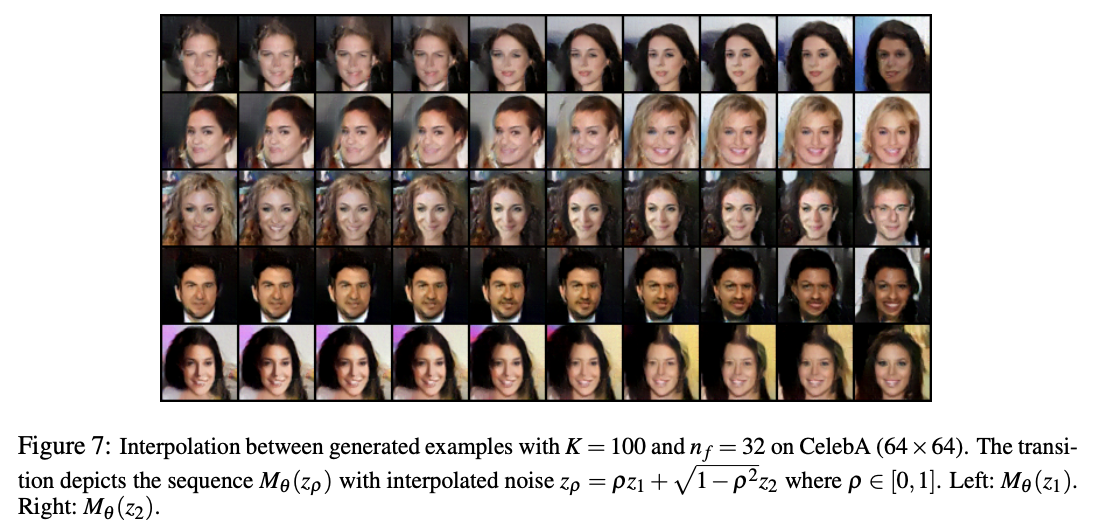

Short-Run MCMC interpolation between synthesized examples

I contributed to this paper, submitted to CVPR, while advised by Dr. Song-Chun Zhu at the Center for Vision, Cognition, Learning, and Autonomy at UCLA.

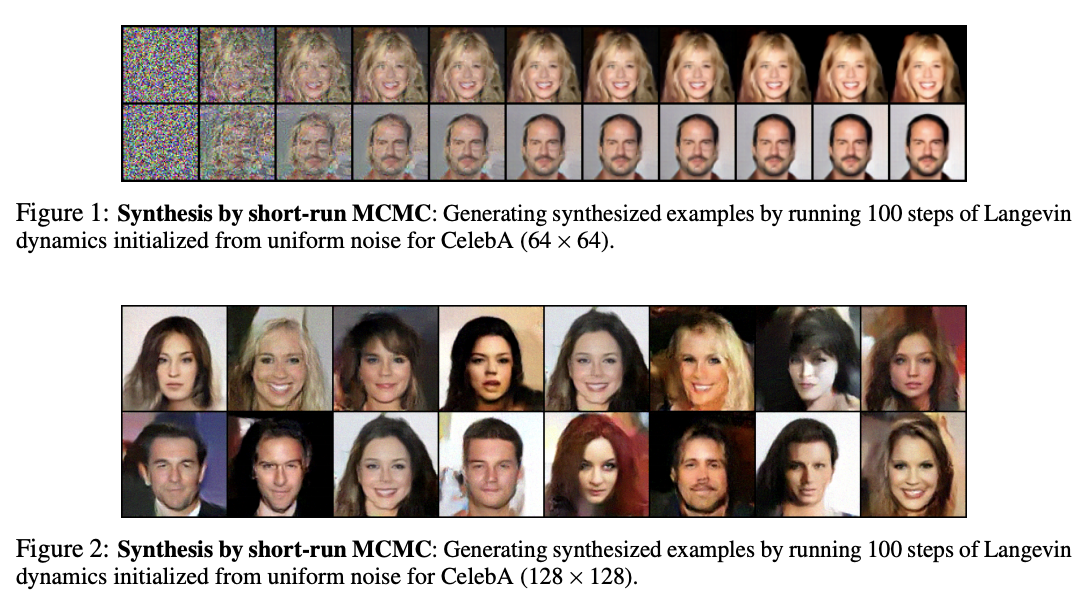

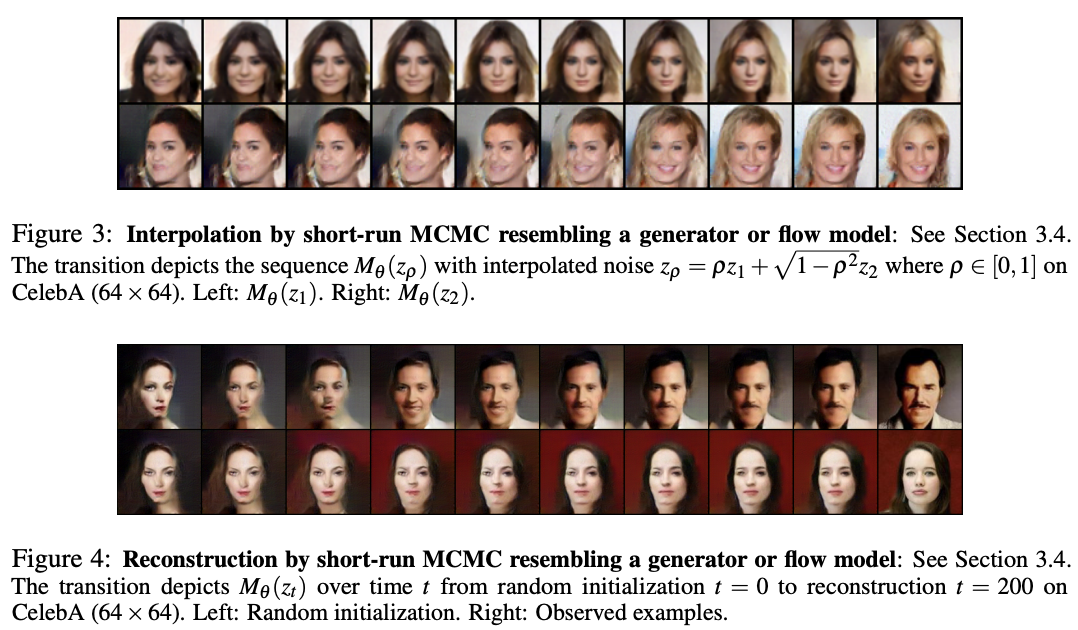

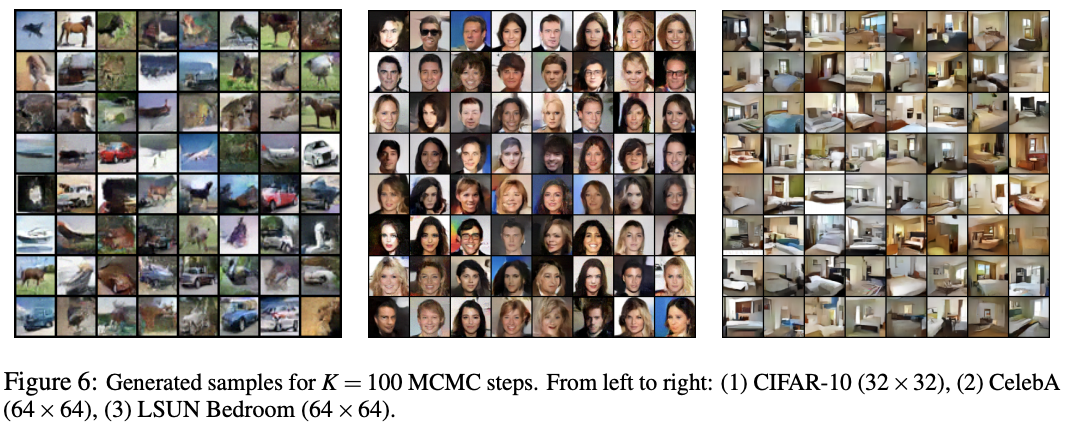

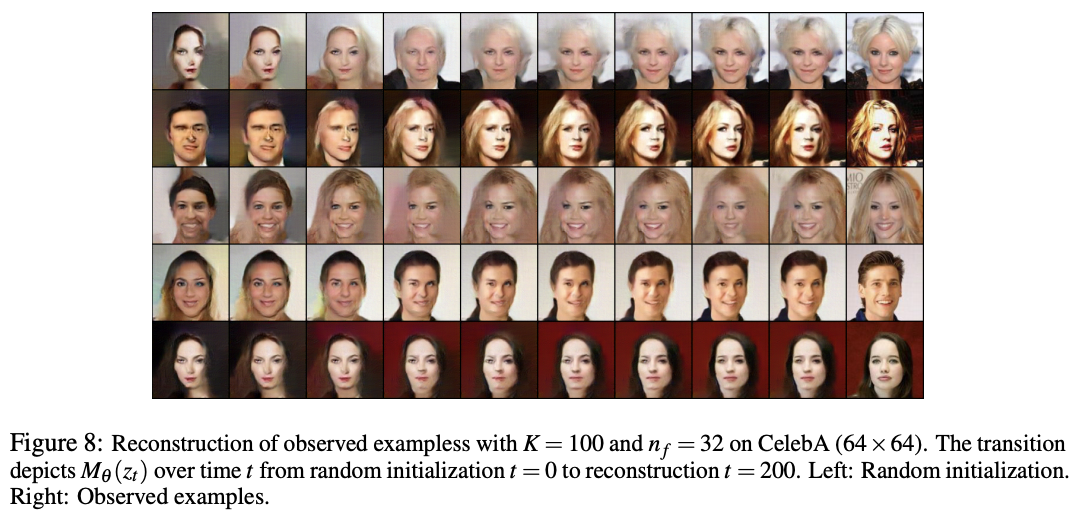

The short-run Markov chain Monte Carlo (MCMC) model can be treated as a valid generative model, just like a variational autoencoder (VAE) or a generative adversarial network (GAN). We use it to synthesize, interpolate, and reconstruct images. Images are synthesized by running a short chain of Langevin dynamics initialized from uniform noise, and the resulting interpolations and reconstructions are comparable to, or qualitatively better than, those of a VAE or GAN.
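The network and sampler settings are not given here, so the following is only a minimal sketch of the sampling idea: a fixed, small number of Langevin steps, x ← x − (s²/2)∇E(x) + s·ε with ε ~ N(0, I), starting from uniform noise on [−1, 1]. As an assumption for illustration, the learned model is replaced by a toy quadratic energy (the hypothetical `grad_energy`), whose density exp(−E) is a Gaussian N(μ, σ²I). The step size and step count are illustrative values, not the paper's.

```python
import math
import random


def grad_energy(x, mu=0.5, sigma=0.2):
    """Gradient of a toy energy E(x) = sum((x_i - mu)^2) / (2 * sigma^2).

    Hypothetical stand-in for a learned energy network; exp(-E) is
    proportional to an isotropic Gaussian N(mu, sigma^2 I).
    """
    return [(xi - mu) / sigma ** 2 for xi in x]


def short_run_langevin(dim, n_steps=100, step_size=0.05, seed=0):
    """Run n_steps of Langevin dynamics from uniform noise on [-1, 1]^dim.

    Each step: x <- x - (s^2 / 2) * dE/dx + s * eps, with eps ~ N(0, I).
    """
    rng = random.Random(seed)
    # Initialize from uniform noise, as in the text above.
    x = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
    for _ in range(n_steps):
        g = grad_energy(x)
        # Gradient descent on the energy plus injected Gaussian noise.
        x = [
            xi - 0.5 * step_size ** 2 * gi + step_size * rng.gauss(0.0, 1.0)
            for xi, gi in zip(x, g)
        ]
    return x


if __name__ == "__main__":
    sample = short_run_langevin(dim=1000, seed=1)
    mean = sum(sample) / len(sample)
    std = math.sqrt(sum((v - mean) ** 2 for v in sample) / len(sample))
    print(f"mean={mean:.3f}, std={std:.3f}")  # roughly near mu=0.5, sigma=0.2
```

Even after only 100 steps, the chain has largely forgotten its uniform initialization and its samples sit close to the toy target. This is the sense in which a short, non-convergent chain can still act as a generator. With a real model, `grad_energy` would be the gradient of a neural network obtained by automatic differentiation.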

A presentation I gave about a related paper is below.